Section: Partnerships and Cooperations

European Initiatives

FP7 & H2020 Projects

D

Participants: Adrien Bousseau, Yulia Gryaditskaya, Bastien Wailly.

Abstract: Designers draw extensively to externalize their ideas and communicate with others. However, drawings are currently not directly interpretable by computers. To test their ideas against physical reality, designers have to create 3D models suitable for simulation and 3D printing. However, the visceral and approximate nature of drawing clashes with the tediousness and rigidity of 3D modeling. As a result, designers only model finalized concepts, and have no feedback on feasibility during creative exploration. Our ambition is to bring the power of 3D engineering tools to the creative phase of design by automatically estimating 3D models from drawings. However, this problem is ill-posed: a point in the drawing can lie anywhere in depth. Existing solutions are limited to simple shapes, or require user input to "explain" to the computer how to interpret the drawing. Our originality is to exploit professional drawing techniques that designers developed to communicate shape most efficiently. Each technique provides geometric constraints that help viewers understand drawings, and that we shall leverage for 3D reconstruction.

Our first challenge is to formalize common drawing techniques and derive how they constrain 3D shape. Our second challenge is to identify which techniques are used in a drawing. We cast this problem as the joint optimization of discrete variables indicating which constraints apply, and continuous variables representing the 3D model that best satisfies these constraints. But evaluating all constraint configurations is impractical. To solve this inverse problem, we will first develop forward algorithms that synthesize drawings from 3D models. Our idea is to use this synthetic data to train machine learning algorithms that predict the likelihood that constraints apply in a given drawing. In addition to tackling the long-standing problem of single-image 3D reconstruction, our research will significantly tighten design and engineering for rapid prototyping.

PhySound

Abstract: Sound is as important as visuals in modern media (films, video games). Yet little effort has been devoted to the rendering of sound from digital environments, compared to the phenomenal advances of visual rendering. Sound is added to virtual scenes through ad hoc editing of real sounds, which requires recording sessions and manual synchronization between recorded clips and visuals, and yields limited and repetitive sounds. This project addresses this problem by generating sounds from virtual environments through physically based simulation, and focuses on a challenging family of objects: thin shells. Characteristic thin shell sounds include tearing cloth and paper, crushing cans and plastic bottles, and crumpling a piece of paper or a plastic bag. The high-quality, offline simulation and rendering of thin shell sound will be addressed through a set of modeling approaches and computational tools (model reduction, high-frequency bandwidth extension and pre-computed sound databases), while real-time, computationally constrained sound rendering will rely on data-driven approaches. This research will considerably widen the range of real-life object sounds that can be digitally generated, and will contribute to the young research field of physically based sound rendering, which has the potential to become the next key technology of the media industry.

EMOTIVE

Partners: Diginext (FR), ATHENA (GR), Noho (IRL), U Glasgow (UK), U York (UK)

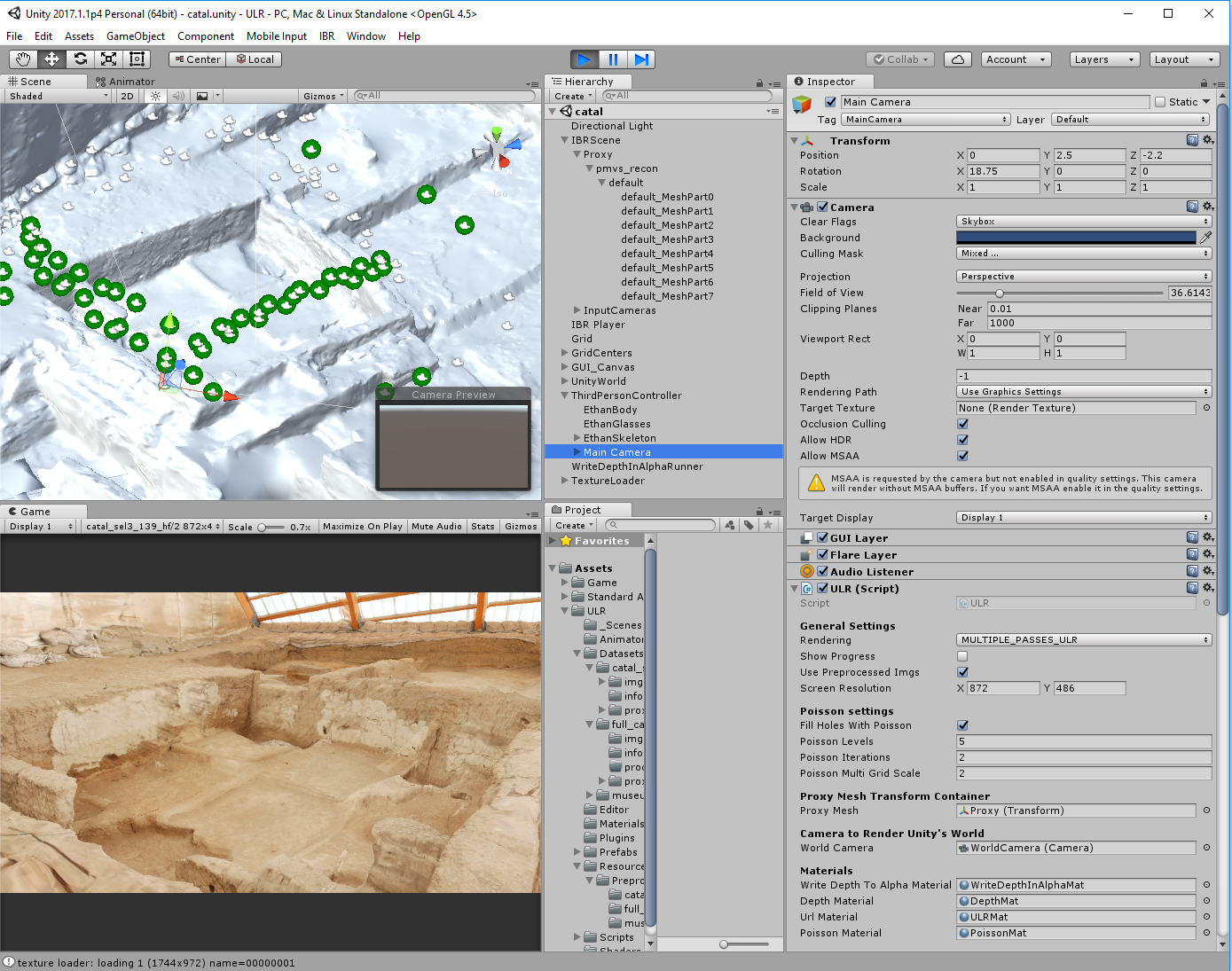

Abstract: Storytelling applies to nearly everything we do. Everybody uses stories, from educators to marketers and from politicians to journalists, to inform, persuade, entertain, motivate or inspire. In the cultural heritage sector, however, narrative tends to be used narrowly, as a method to communicate to the public the findings and research conducted by the domain experts of a cultural site or collection. The principal objective of the EMOTIVE project is to research, design, develop and evaluate methods and tools that can support the cultural and creative industries in creating Virtual Museums which draw on the power of "emotive storytelling". This means storytelling that can engage visitors, trigger their emotions, connect them to other people around the world, and enhance their understanding, imagination and, ultimately, their experience of cultural sites and content. EMOTIVE does this by providing authors of cultural products with the means to create high-quality, interactive, personalized digital stories. GRAPHDECO contributes by developing novel image-based rendering techniques to help museum curators and archeologists provide more engaging experiences, in particular for the offsite experience of one of the sites (see Fig. 11).